Get Started

Requirements

Terraform CLI (>=1.4) installed

AWS CLI installed

AWS account and associated credentials that allow you to create resources

Configure the AWS provider to authenticate with your AWS credentials

Censys.io account

Perform the following actions to deploy the necessary resources.

Get UNX-OBP Artifacts

Download and extract the contents of the UNX-OBP package.

Build Lambda Deployment Packages

Change directory to the “scripts” folder and run:

./update_lambda_deployment_packages.sh

This builds fresh Lambda deployment packages with the latest required Python libraries and moves them into the appropriate terraform directory.

Rerun this script any time you update the Lambda function code.

Note: if you add any other non-standard Python libraries, you will need to modify this bash script to include them for the relevant function(s), or package them manually.

Create and Store API Credentials in Secrets Manager

Censys recently transitioned to its new Censys Platform, and Free tier accounts no longer have API access as a result. Skip this step unless you still have Censys Legacy Search API access.

You need to create a secret for the Censys.io API.

See the Create an AWS Secrets Manager secret guide for the full up to date process of interacting with AWS Secrets Manager. For our process, note the following:

Select “Other type of secret” for Secret Type.

The secret has two key/value pairs. The keys must be named “uid” (the user ID, username, or API key for the account) and “skey” (the secret key for the account API). Find these values in your account settings in the Censys.io web interface.

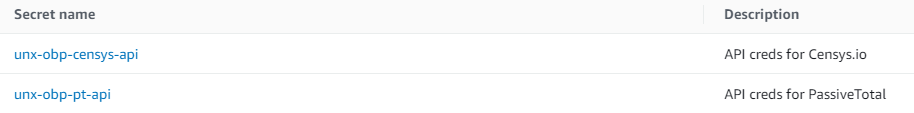

The Censys.io secret name must be “unx-obp-censys-api”. Your Secrets Manager console should have an entry like below:

Regarding API quotas, an enterprise-level subscription to these services is recommended, but community/free accounts should work fine if you are just trying this out.

Importantly, the system will continue to function even if one or both API quotas are reached, but the output will be less useful. See Error Handling and Monitoring for more information about discovering when enrichment errors occur.
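For reference, the secret described in this step can be sketched in Python. This is a minimal illustration, not part of the UNX-OBP package: it builds the JSON secret string with the two required keys and assembles (without running) an `aws secretsmanager create-secret` command. The helper names and placeholder credentials are assumptions made for the example.

```python
import json

SECRET_NAME = "unx-obp-censys-api"  # secret name required by this guide


def build_censys_secret(uid, skey):
    """Return the JSON secret string containing the two required keys."""
    if not uid or not skey:
        raise ValueError("both uid and skey must be non-empty")
    return json.dumps({"uid": uid, "skey": skey})


def create_secret_command(uid, skey):
    """Build, but do not run, an AWS CLI command that would create the secret."""
    return [
        "aws", "secretsmanager", "create-secret",
        "--name", SECRET_NAME,
        "--secret-string", build_censys_secret(uid, skey),
    ]


# Placeholder values only; substitute the real ones from your Censys account.
print(create_secret_command("EXAMPLE_UID", "EXAMPLE_SKEY"))
```

The resulting list could be passed to `subprocess.run` once real credentials are substituted. Creating the secret through the console, as described above, produces an equivalent key/value secret.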

Update Terraform Variables

Change directory to the “terraform” folder.

By default, resources will be deployed to the us-east-1 AWS region, but you can adjust the “region” variable as necessary.

→ Create an EC2 Key Pair in the AWS region where you will deploy, and store the private key locally. Use the AWS Management Console or see the command line example below.

Example using AWS CLI:

aws ec2 create-key-pair --key-name aws --query 'KeyMaterial' --output text > aws.pem

→ Update the “key_pair_name” variable to match your “--key-name” value, excluding the “.pem” or “.ppk” file extension.

→ Restrict possible connections to the EC2 instance by updating the “my_client_pubnets” variable to include the client IP address(es) or CIDR block(s) from which you will connect.

→ Update the “environment”, “project”, and “owner” variables as appropriate for your deployment.
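Before setting “my_client_pubnets”, it can help to confirm that each entry parses as a valid network. The sketch below uses Python's standard `ipaddress` module. The function name is hypothetical, and whether the Terraform configuration accepts a bare IP address without a prefix length is not confirmed here, so the example normalizes such entries to /32.

```python
import ipaddress


def validate_client_pubnets(entries):
    """Return normalized CIDR strings, raising ValueError on a bad entry."""
    normalized = []
    for entry in entries:
        # strict=True rejects values with host bits set, e.g. "1.2.3.4/24".
        network = ipaddress.ip_network(entry, strict=True)
        normalized.append(str(network))
    return normalized


# Example addresses only; replace them with your own client networks.
print(validate_client_pubnets(["1.2.3.0/24", "4.5.6.0/24", "203.0.113.7"]))
```

An entry with host bits set, such as "1.2.3.4/24", raises `ValueError`, which catches a common copy/paste mistake before `terraform apply` runs.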

You can modify the variables.tf file directly, or you can set these values separately by creating a terraform.tfvars file at the root of the terraform folder.

An example terraform.tfvars:

key_pair_name = "aws"
my_client_pubnets = [
  "1.2.3.0/24",
  "4.5.6.0/24",
]
environment = "test"
project = "unx-obp"
owner = "CHANGEME"

If you need to deploy to AWS GovCloud (US), you must update the following:

The “region” variable

The “partition” variable to “aws-us-gov”

The “aws_ami” data resource in config.tf

Comment out the “owners” line referring to “amazon commercial”

Uncomment the “owners” line referring to “aws govcloud”
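As background on why the “partition” variable matters: AWS ARNs embed the partition as their second field, so resources in GovCloud (US) use “aws-us-gov” where commercial regions use “aws”. The sketch below illustrates that mapping. How the UNX-OBP Terraform code actually consumes the variable is an assumption, and the helper names and bucket name are hypothetical.

```python
def partition_for_region(region):
    """Map an AWS region name to its ARN partition."""
    if region.startswith("us-gov-"):
        return "aws-us-gov"
    if region.startswith("cn-"):
        return "aws-cn"
    return "aws"


def s3_bucket_arn(region, bucket):
    """Build an S3 bucket ARN using the partition that matches the region."""
    return f"arn:{partition_for_region(region)}:s3:::{bucket}"


# "example-bucket" is a placeholder name used only for illustration.
print(s3_bucket_arn("us-east-1", "example-bucket"))
print(s3_bucket_arn("us-gov-west-1", "example-bucket"))
```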

Deploy Infrastructure with Terraform

Make sure appropriate AWS credentials are available to the shell. Consult Configure the AWS provider to authenticate with your AWS credentials. It is recommended to start a screen session and set the appropriate environment variables.

In the “terraform” folder, run:

terraform init

terraform validate

terraform apply

Review the plan, type ‘yes’, and press Enter.

This will deploy the following:

An entirely new VPC, utilizing Availability Zones a and b in the selected region

Internet gateway

2 public subnets, 2 private subnets, 2 NAT gateways

S3 and DynamoDB VPC Endpoints

OpenSearch / Elasticsearch (single node)

EC2 instance with NiFi (single node) and Flask server (started in screen session)

All necessary resources for IAM roles/policies, security groups, S3, DynamoDB, Lambda, Step Functions, SQS, and EC2 Auto Scaling for baseline regeneration

Wait for the deployment to complete, which can take about 20-30 minutes. Once the Terraform apply is complete, allow about five extra minutes for the EC2 instance user data (startup) script to finish.

Make note of the Terraform outputs. You can always run “terraform output” to get these values. Do not include the quotes when you copy/paste these elsewhere.
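If you prefer to read the outputs programmatically rather than copy them by hand, `terraform output -json` prints every output as a JSON object, which avoids the quoting issue. The sketch below flattens that structure into a plain dictionary. The sample values are made up for illustration, and only the two output names mentioned in this guide are shown.

```python
import json


def parse_terraform_outputs(raw_json):
    """Flatten `terraform output -json` text into a {name: value} dict."""
    outputs = json.loads(raw_json)
    return {name: entry["value"] for name, entry in outputs.items()}


# Illustrative sample shaped like `terraform output -json`; values are made up.
SAMPLE = """
{
  "nifi_instance_public_dns": {
    "sensitive": false,
    "type": "string",
    "value": "ec2-198-51-100-10.compute-1.amazonaws.com"
  },
  "search_dashboards_endpoint": {
    "sensitive": false,
    "type": "string",
    "value": "https://search-example.us-east-1.es.amazonaws.com/_dashboards"
  }
}
"""

for name, value in parse_terraform_outputs(SAMPLE).items():
    print(f"{name} = {value}")
```

In practice the input would come from running `terraform output -json` in the “terraform” folder and capturing its standard output.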

SSH to EC2 Instance with SOCKS Configured

Only TCP/22/SSH is exposed on the EC2 instance.

You’ll access the NiFi, Dashboards, and UNX-OBP List Manager Web UIs through the SSH session.

PuTTY

Connection > SSH > Tunnels - Source port: 28080; Destination: leave blank; Dynamic IPv4 → Add

Connection > SSH > Auth > Credentials - Browse to add the private key file that you stored when creating the EC2 Key Pair.

Connect to the EC2 instance using the “nifi_instance_public_dns” Terraform output as the Host Name. Login as “ec2-user”.

Command Line

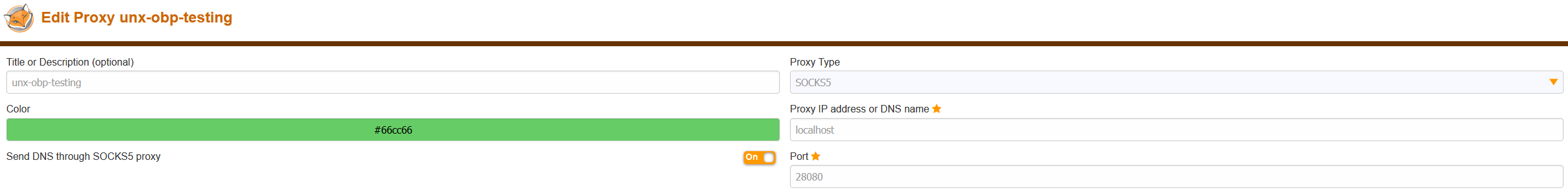

ssh -i key_pair_name.pem -D28080 ec2-user@nifi_instance_public_dns

Set up and enable a SOCKS5 proxy for your browser (e.g., using FoxyProxy in Firefox), pointing it at localhost port 28080.

In your browser you should be able to hit the following:

NiFi Web UI at https://unx-obp-nifi/nifi

Dashboards Web UI - see the “search_dashboards_endpoint” Terraform output

UNX-OBP List Manager Web UI at http://unx-obp-listmgr/
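If the pages above do not load, a quick first check is whether the SSH dynamic forward is actually listening on local port 28080. The sketch below is a simple TCP connect test using only the standard library. It confirms that something accepts connections on the port, not that it speaks SOCKS5, and the function name is hypothetical.

```python
import socket


def port_is_listening(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise.
        return sock.connect_ex((host, port)) == 0


# 28080 is the dynamic forward port used in the SSH examples above.
print("SOCKS port 28080 listening:", port_is_listening("127.0.0.1", 28080))
```

A result of False usually means the SSH session has dropped or was started without the dynamic forward option.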

Prepare NiFi

Download the updated NiFi Template file, unx-obp-public-nifi-dataflow.xml, from the recently provisioned NiFi S3 bucket.

Log in to the NiFi Web UI (credentials should have been displayed when you connected to the EC2 instance). If you don’t see them, run cat /etc/motd.

Upload the template file. Right click the canvas → Upload template.

Load the template onto the canvas by dragging the Template icon in the top navigation bar onto the canvas.

Enable all associated Controller Services. Right click the canvas → Enable all controller services.

Wait for a refresh, or right click the canvas and Refresh.

Confirm no processors are in an invalid state (the triangle with exclamation point should be grey - see NiFi User Interface for more information).

Start the entire imported dataflow via the Operate Palette (on the left side, using the Play icon/button).

Generate Traffic

From the EC2 instance, run sudo su - to become root, then use the generate_todays_protocol_traffic.py script to generate pseudo-protocol traffic from the provided PSV file.

You can generate traffic in bulk to a local SiLK binary file, which you’ll then move into the “input” directory. NiFi will automatically pick up and process files in that directory and send records on to the SQS queue.

python3 generate_todays_protocol_traffic.py -p smb -f smb-base-traffic-agg-updated.psv \
  --silk && mv *.bin input

Alternatively, you can generate traffic in real time directly to SQS, bypassing NiFi altogether.

python3 generate_todays_protocol_traffic.py -p smb -f smb-base-traffic-agg-updated.psv \
  -q <sqs_incoming_queue_url>

Refresh the canvas and review any NiFi processors or process groups for basic stats and any errors.

Review alert outputs via the “UNX-OBP Alerts Overview” dashboard. Review allowlisted hits via the “UNX-OBP Allowlisted Overview” dashboard.

If you are not seeing anything populate the dashboards, see Error Handling and Monitoring.

Run the script with -h to see further options.